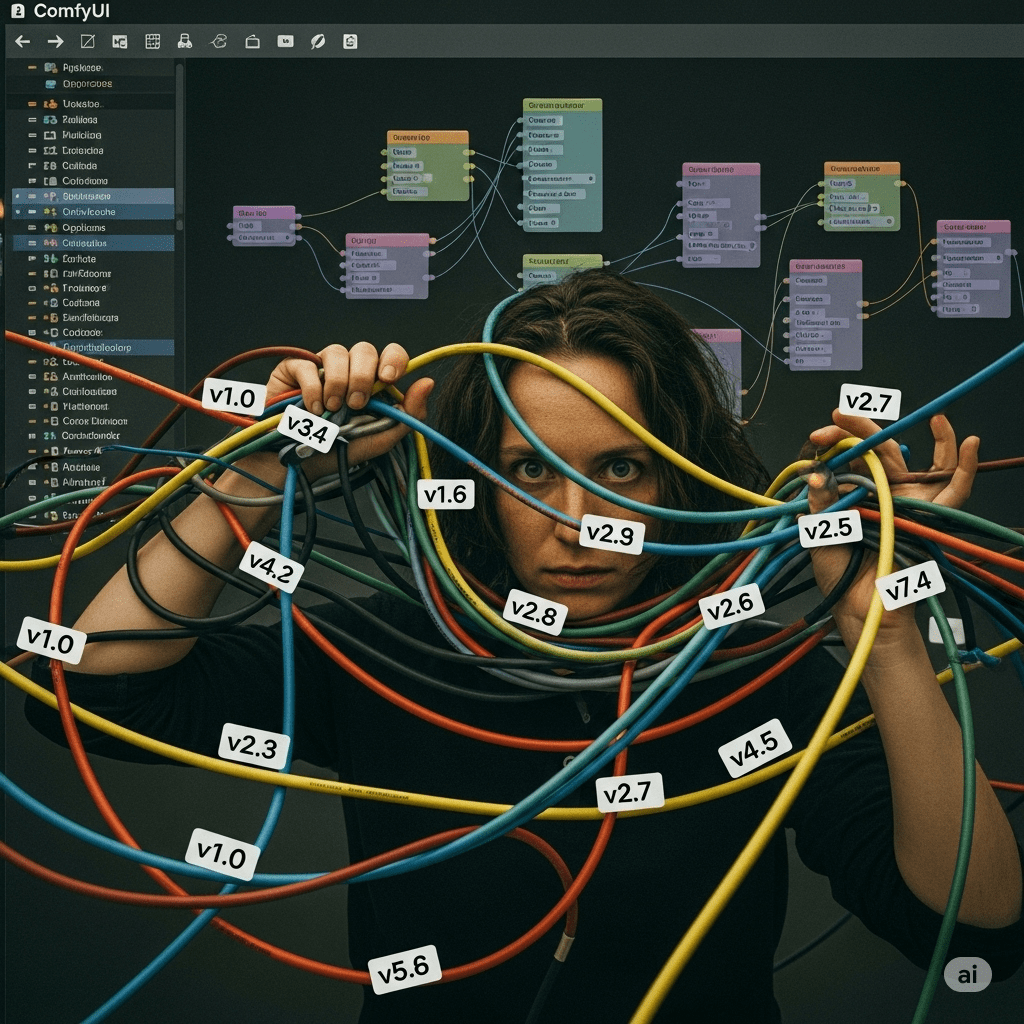

Version management is painful with ComfyUI. It is an open source project with lots of people contributing custom nodes and models you can add to your installation. That is both fantastic (so much is out there!) and painful, because changes are not always backwards compatible. So when someone shares a workflow, you need to know not only which custom nodes they have loaded, but also which versions.

In this post I explore how I install ComfyUI based on Git, and can then share the exact versions of all the extensions I installed as a result.

My First Experience with ComfyUI Version Hell

I am relatively new to ComfyUI, and my first installation took a long time to get working because I was trying to use a workflow someone else had shared. They had developed their own custom node and used it in the workflow, but the video was 6 months old and in the meantime they had renamed their node. As a newcomer, it took me a while to understand what was going on. It did not help that I had downloaded a prebuilt version of ComfyUI for Windows: ComfyUI itself changes over time, including the names of the nodes it ships with.

Halfway through my pain, I tried adding ComfyUI Manager to the mix. It lets you install lots of custom nodes. For me, it didn’t. That does not mean the tool itself is not useful, but rather that starting with a messed-up build is not magically fixed by adding another tool.

My conclusion: Prebuilt versions of ComfyUI might sound like they reduce pain, but the rapid changes going on make me question how much they help.

PyTorch and RTX 5090

Another problem I faced is that I have an RTX 5090. I was using the free tier of ChatGPT for advice, but it was trained before the 5090 was supported, so it kept giving me bad advice. To get maximum speed, I found the following commands useful for upgrading PyTorch to a version that supports the RTX 5090. They remove the old version, then install the CUDA 12.8 build (cu128), which is the correct one for my RTX 5090.

```shell
python -m pip uninstall torch torchvision torchaudio -y
python -m pip install torch torchvision torchaudio \
    --index-url https://download.pytorch.org/whl/cu128
```

Git to the Rescue

So I tried a new approach. I decided to use git to fetch both the base ComfyUI distribution AND all custom nodes. Git maintains version histories, so to share a configuration I can share the exact commit of each git repo, reducing the risk of someone copying my setup and failing. I am now using:

- A standard installation of python

- Virtual python environments with ‘venv’ so each installation of ComfyUI has its own particular versions of dependencies loaded

- Git to checkout the base ComfyUI installation and all custom nodes

I have multiple installations as I learn, so I can try a new custom node in isolation without messing up my main installation. I also keep track, per installation, of how I constructed it so I can rebuild it if required.
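One piece of that per-installation record can be the Python packages themselves. The following is a minimal sketch, assuming the venv for the installation is already activated. The file name `requirements-lock.txt` and the function names are my own choices for illustration, not something ComfyUI requires.

```shell
#!/bin/bash
# Hypothetical helpers: record and restore the exact Python package versions
# of the currently active venv. Set PYTHON to override the interpreter name.
PYTHON="${PYTHON:-python}"

# Write every installed package, pinned to its version, into a lock file.
save_python_packages() {
    local lock_file="${1:-requirements-lock.txt}"
    "$PYTHON" -m pip freeze > "$lock_file"
}

# Reinstall exactly those versions into a fresh (activated) venv.
restore_python_packages() {
    local lock_file="${1:-requirements-lock.txt}"
    "$PYTHON" -m pip install -r "$lock_file"
}
```

Running `save_python_packages` in the installation directory leaves a file that can sit next to the other notes on how the installation was built. One caveat: packages installed from the PyTorch index are likely to be recorded with a local version suffix such as `+cu128`, so restoring them would probably also need the matching `--index-url` or `--extra-index-url` option.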

Creating a new ComfyUI Deployment

I am on Windows, but I grew up as a Unix developer. So I use the ‘git bash’ shell for Windows (comes with Git for Windows) rather than Command Prompt or PowerShell. So the following is what I do, ensuring I am using the RTX 5090 version of PyTorch. Note: I have Python 3.12.10 installed.

Important notes before you copy this verbatim without thinking:

- You are assumed to have Git for Windows (which includes “git bash”) already installed.

- Start with a new git bash window to ensure no venv environment variables are still set from a previous run.

- The following contains steps specific to an RTX 5090 (the --nvidia option and the PyTorch remove and install lines).

- Remove the --listen 0.0.0.0 if you are security conscious.

- The ‘comfy’ command does a git clone of the latest release. There are command-line options to select a specific version.

To set up a new installation with an RTX 5090, I open a new git bash window and run the following commands.

```shell
# Create the installation directory and a dedicated virtual environment
mkdir ComfyUI-new
cd ComfyUI-new
python -m venv cuienv
source cuienv/Scripts/activate

# Install comfy-cli and use it to fetch ComfyUI into ./ComfyUI
python -m pip install comfy-cli
comfy --workspace=ComfyUI --skip-prompt install --nvidia

# Write a small launcher script
cat <<'EOF' >run.sh
#!/bin/bash
source cuienv/Scripts/activate
exec python -s ComfyUI/main.py --windows-standalone-build --listen 0.0.0.0
EOF

# Swap in the CUDA 12.8 build of PyTorch for the RTX 5090
python -m pip uninstall torch torchvision torchaudio -y
python -m pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu128

./run.sh
```

The end result is that I have a ComfyUI-new directory (call it whatever you like) containing a run.sh shell script, a cuienv directory holding the Python libraries etc. for this installation, and a ComfyUI directory containing the ComfyUI application (with ComfyUI/models, ComfyUI/custom_nodes etc. directories).

To start it up, I open a git bash window, cd to the ComfyUI-new directory, and execute ./run.sh. It loads the virtual environment and starts ComfyUI. The --windows-standalone-build option adds some Windows-specific conveniences, such as immediately opening the UI in a browser for you. I added --listen 0.0.0.0 (remove it for better security) because I have multiple machines on my home network, plus iPads, that I connect from. E.g. I can check the status of the ComfyUI queue from bed with my phone.

To stop ComfyUI, I hit control-C in the git bash window to kill the shell script.
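Before the first launch, it can be worth confirming that the venv really picked up a CUDA 12.8 build of PyTorch. The following is a minimal sketch, assuming the venv is active. The function name `check_torch` is made up for illustration, while `torch.__version__`, `torch.version.cuda` and `torch.cuda.is_available()` are standard PyTorch attributes.

```shell
#!/bin/bash
# Hypothetical helper: print which PyTorch build the active venv is using.
# Set PYTHON to override the interpreter name.
PYTHON="${PYTHON:-python}"

check_torch() {
    "$PYTHON" -c '
import torch
print("torch version :", torch.__version__)
print("built for CUDA:", torch.version.cuda)
print("GPU available :", torch.cuda.is_available())
'
}
```

After the reinstall steps above, I would expect `check_torch` to report a CUDA value of 12.8 and a GPU that is available. If it reports a different CUDA version, or no GPU, the PyTorch uninstall and install lines probably ran outside the venv.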

Custom Nodes

I then add custom nodes to the installation, using git commands. That is, I cd into the ComfyUI/custom_nodes directory and use git clone to get the files for that extension (when possible). That means I know the exact version of each custom node.
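As a rough sketch of that step, the helper below clones a custom node and optionally pins it to a specific commit. The function name `install_custom_node` and the `CUSTOM_NODES_DIR` variable are my own inventions for illustration. It also assumes that a node's Python dependencies, if any, live in a `requirements.txt` file, which is common but not guaranteed.

```shell
#!/bin/bash
# Hypothetical helper: clone a custom node and pin it to a given commit.
# Usage: install_custom_node <repo-url> [commit]
install_custom_node() {
    local repo_url="$1"
    local commit="${2:-}"
    local nodes_dir="${CUSTOM_NODES_DIR:-ComfyUI/custom_nodes}"
    local name
    name="$(basename "$repo_url" .git)"

    git clone "$repo_url" "$nodes_dir/$name" || return 1

    # Pin to an exact commit when one is given.
    if [ -n "$commit" ]; then
        git -C "$nodes_dir/$name" checkout --quiet "$commit" || return 1
    fi

    # Install the node's dependencies into the active venv, if it lists any.
    if [ -f "$nodes_dir/$name/requirements.txt" ]; then
        python -m pip install -r "$nodes_dir/$name/requirements.txt"
    fi

    # Print the commit that ended up checked out.
    git -C "$nodes_dir/$name" rev-parse HEAD
}
```

Run from the ComfyUI-new directory with the venv active, a call might look like `install_custom_node https://github.com/ltdrdata/ComfyUI-Manager ff0604e3b654c4b032997aeab4458f07513816dd`. Leaving off the commit takes whatever the repo's default branch currently points at.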

This then allows me to have the following script, “report-version.sh”. It walks the custom_nodes/ and models/ directories and outputs the commands needed to rebuild the directory structure, including the git commit hash of the exact version of each repo. It could do with a bit of work (e.g. it does not skip the __pycache__ directory automatically), but the idea is that the output is sufficient to rebuild the installation. I run it periodically and redirect the output to “report-version.txt”, so if I mess something up I have enough information to try to go back to a previous version of the extensions. It also generates checksums of model files for the paranoid.

Frankly, I would only suggest this script for developers already familiar with git.

```shell
#!/bin/bash

set -e

cd ComfyUI

echo "# Snapshot of current ComfyUI setup"
echo ""

# Function to report git repo status
report_git_repo() {
    local dir="$1"
    local rel_dir="$2"

    cd "$dir"
    if [ -d .git ]; then
        local repo_url=$(git remote get-url origin)
        local commit_hash=$(git rev-parse HEAD)

        echo "git clone $repo_url \"$rel_dir\""
        echo "cd \"$rel_dir\""
        echo "git checkout $commit_hash"

        local modified=$(git status --porcelain)
        if [ -n "$modified" ]; then
            echo "# WARNING: Modified files in $rel_dir"
            git status --short | sed 's/^/# /'
        fi

        echo "cd - > /dev/null"
        echo ""
    else
        echo "# No git repo found in $rel_dir"
        echo ""
    fi
    cd - > /dev/null
}

# Step 1: ComfyUI core version
echo "# ComfyUI core version"
if [ -d .git ]; then
    report_git_repo "." "ComfyUI"
else
    echo "# Not a Git repository: ComfyUI core version not detected"
    echo ""
fi

# Step 2: Custom nodes
custom_nodes_dir="custom_nodes"
echo "# Custom Nodes"
echo ""
if [ -d "$custom_nodes_dir" ]; then
    for d in "$custom_nodes_dir"/*/; do
        d="${d%/}"
        dir_name=$(basename "$d")
        report_git_repo "$d" "$custom_nodes_dir/$dir_name"
    done
else
    echo "# No custom_nodes directory found"
    echo ""
fi

# Step 3: Models
echo "# Models (with SHA256 hashes)"
echo ""
model_dirs=(models/checkpoints models/loras models/controlnet models/clip models/vae)
for dir in "${model_dirs[@]}"; do
    if [ -d "$dir" ]; then
        echo "# Directory: $dir"
        find "$dir" -type f \( -iname "*.safetensors" -o -iname "*.pt" -o -iname "*.ckpt" \) | while read -r model; do
            hash=$(sha256sum "$model" | awk '{print $1}')
            echo "# $(basename "$model") - $hash"
        done
        echo ""
    fi
done
```

The following is example output. Note that it generates commands to rebuild the ComfyUI directory structure, but does not set up the python environment.

```shell
$ ./report-version.sh
# Snapshot of current ComfyUI setup

# ComfyUI core version
git clone https://github.com/comfyanonymous/ComfyUI "ComfyUI"
cd "ComfyUI"
git checkout 7d627f764c2137d816a39adbc358cb28c1718a47

# Custom Nodes

# No git repo found in custom_nodes/ComfyUI-Distributed

git clone https://github.com/ltdrdata/ComfyUI-Manager "custom_nodes/ComfyUI-Manager"
cd "custom_nodes/ComfyUI-Manager"
git checkout ff0604e3b654c4b032997aeab4458f07513816dd
cd - > /dev/null

# No git repo found in custom_nodes/__pycache__

git clone https://github.com/shadowcz007/comfyui-ultralytics-yolo "custom_nodes/comfyui-ultralytics-yolo"
cd "custom_nodes/comfyui-ultralytics-yolo"
git checkout c48a4aeaa4a1b07c882e3334ed9630084d817fce
cd - > /dev/null

# Models (with SHA256 hashes)

# Directory: models/checkpoints
# DreamShaper_8_pruned.safetensors - 879db523c30d3b9017143d56705015e15a2cb5628762c11d086fed9538abd7fd
# realisticVisionV60B1_v51HyperVAE.safetensors - f47e942ad4c30d863ad7f53cb60145ffcd2118845dfa705ce8bd6b42e90c4a13
# sd_xl_base_1.0.safetensors - 31e35c80fc4829d14f90153f4c74cd59c90b779f6afe05a74cd6120b893f7e5b
# sd_xl_refiner_1.0.safetensors - 7440042bbdc8a24813002c09b6b69b64dc90fded4472613437b7f55f9b7d9c5f
# svd_xt.safetensors - b2652c23d64a1da5f14d55011b9b6dce55f2e72e395719f1cd1f8a079b00a451
# v1-5-pruned.safetensors - 1a189f0be69d6106a48548e7626207dddd7042a418dbf372cefd05e0cdba61b6
# wildcardxXLTURBO_wildcardxXLTURBOV10.safetensors - 276d222ef08a3e8c60c49069bad728b8654b8577e1595859a1feffc67141a408

# Directory: models/loras

# Directory: models/controlnet
# diffusion_pytorch_model.safetensors - 2cd23f9da9f2f24d75ded22c0c2596782312aa0b88e05076b4b8621a0b1fa9d1
# mistoLine_rank256.safetensors - 6c46c871a49bf9e74114f0e1f87d818426203fd62847e78043e4ce2d40834d53
# OpenPoseXL2.safetensors - 5a4b928cb1e93748217900cb66d4135bf70d932d2924232f925910fad9e43a92

# Directory: models/clip
# clip_l.safetensors - 660c6f5b1abae9dc498ac2d21e1347d2abdb0cf6c0c0c8576cd796491d9a6cdd
# t5xxl_fp16.safetensors - 6e480b09fae049a72d2a8c5fbccb8d3e92febeb233bbe9dfe7256958a9167635

# Directory: models/vae
# ae.safetensors - afc8e28272cd15db3919bacdb6918ce9c1ed22e96cb12c4d5ed0fba823529e38
# wan_2.1_vae.safetensors - 2fc39d31359a4b0a64f55876d8ff7fa8d780956ae2cb13463b0223e15148976b
```
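The model hashes in a report like this can be used by someone else to check they downloaded the same file. The following is a minimal sketch; the function name `verify_model` is hypothetical, and it relies only on `sha256sum` and `awk`, which the report script already uses.

```shell
#!/bin/bash
# Hypothetical helper: compare a model file against a SHA256 hash copied
# from a line of a shared report-version.txt.
# Usage: verify_model <path-to-model> <expected-sha256>
verify_model() {
    local model="$1"
    local expected="$2"
    local actual
    actual="$(sha256sum "$model" | awk '{print $1}')"

    if [ "$actual" = "$expected" ]; then
        echo "OK: $(basename "$model")"
    else
        echo "MISMATCH: $(basename "$model") (got $actual)"
        return 1
    fi
}
```

For example, `verify_model ComfyUI/models/vae/ae.safetensors afc8e28272cd15db3919bacdb6918ce9c1ed22e96cb12c4d5ed0fba823529e38` checks a local copy against the hash listed above.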

Model Files

Model files (which are large) do not use the above. For now I download and copy the ones I want per ComfyUI installation. Model files are easier in that once released, they do not change, so there is no version management problem. But they are big, so copying them per installation consumes a lot of disk space. On my list to explore: I believe ComfyUI has a configuration file setting that lets you create a shared model directory and point all installations at it.

I have not tried this yet due to the luxury of just upgrading to a new desktop with bigger (empty) disks. I am not yet sure whether to use a shared directory, or use Windows symbolic links so I can add references to only the models I need into an installation. For example, I can have a Wan2.1 installation with only the model files for that model, so I am less likely to mix the wrong files together. I want to explore some more before making any recommendations.
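For the shared-directory option, my understanding is that ComfyUI reads an `extra_model_paths.yaml` file from its top-level directory (the repository ships an `extra_model_paths.yaml.example` to copy from). The following is an untested sketch rather than a recommendation. It assumes the shared directory uses the same subdirectory names as ComfyUI/models; the section name `shared`, the function name, and the example path `D:/ai-models` are all made up for illustration.

```shell
#!/bin/bash
# Hypothetical helper: write an extra_model_paths.yaml into a ComfyUI
# checkout so that it also searches a shared model directory.
# Usage: write_extra_model_paths <comfyui-dir> <shared-model-dir>
write_extra_model_paths() {
    local comfy_dir="$1"     # e.g. ComfyUI
    local shared_dir="$2"    # e.g. D:/ai-models (assumed location)

    # Heredoc body starts at column 0 so the YAML indentation is exact.
    cat > "$comfy_dir/extra_model_paths.yaml" <<EOF
shared:
    base_path: $shared_dir
    checkpoints: checkpoints/
    loras: loras/
    controlnet: controlnet/
    clip: clip/
    vae: vae/
EOF
}
```

Calling `write_extra_model_paths ComfyUI D:/ai-models` from each installation directory would point them all at the same model files, at the cost of every installation seeing every model.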

Wrapping Up

My first experience of ComfyUI using a prebuilt distribution and old YouTube videos was painful. The project changes rapidly, as does the whole AI field. That is just the pain of being on the bleeding edge. I wrote up this post to explore how to share an exact distribution and the versions of all the code, so tutorials might not date as badly. I would rather have a more standardized way, but leveraging git to get the code of all your custom nodes does make it easier to create new installation directories of a particular ComfyUI version. I am planning on giving this a go when I start blogging about some of the experiments I try.

And of course, this may already be a solved problem – I just have not found it yet! Lol!