Interested in high quality 3D product configurators for your ecommerce site? Today's Omniverse team livestream recapped the recent GTC conference, and over half the video covered product configurators, including web delivery!

What is Omniverse?

Sometimes it’s hard to keep up with everything that is happening with just Omniverse, let alone the rest of NVIDIA. Personally, I have been building a web app that provides a simple workflow for turning a screenplay (text) into an animated movie. The goal is not production quality, more simple YouTube-quality video storytelling. I may never finish, but it’s interesting to learn the technologies. Omniverse has been available for running on your desktop for some time, but I had found it hard to really get into their web plans. With the recent GTC conference, many parts of their vision are starting to fit together in my mind.

If you are not familiar with Omniverse, it is software from NVIDIA for high quality rendering and simulation. They have an application building framework, with prebuilt tools like Omniverse USD Composer. OpenUSD (Universal Scene Description, originally from Pixar) is the 3D file format the tools are built on, and it is gaining traction in the industry as more tools add support. For example, it is also used by the Apple Vision Pro.

Omniverse USD Composer is available as a free download for individual use, and provides a desktop app for creating and viewing USD scenes. When most people think of Omniverse, they think of USD Composer. However, Omniverse Kit is an application framework for building your own apps from various extensions – USD Composer is assembled in the same way. This means you can, if you want, create your own extensions and then assemble custom apps for your specific needs.

A problem, however, is that this approach requires a modern NVIDIA RTX GPU and uses up a lot of local file storage. That raises the question of how to get 3D assets to the masses. With my background, I am especially interested in web delivery, with zero software install required.

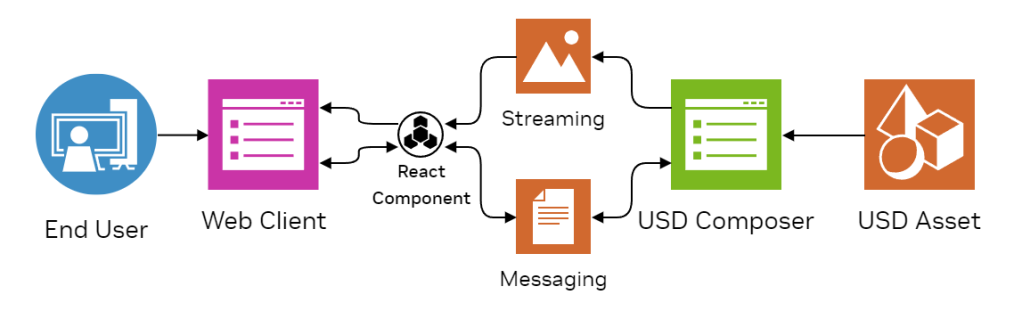

The good news is Omniverse has been releasing different parts of the story as they complete them, including Omniverse Cloud where they have been building out a cloud based solution allowing streaming of rendered results to a web page. This means you can build your Omniverse Kit extensions as microservices, deploy them into Omniverse Cloud, then stream the rendered results to a web view.

Product Configuration with 3D Experiences

NVIDIA recently held their GTC Conference, in person for the first time since COVID. On the livestream today the Omniverse team shared their experiences and highlights from the conference.

What was most of the content on? Product configuration and presentation, streamed to a web browser (and Apple Vision Pro)!

Omniverse is frequently used in the spheres of synthetic image generation, digital twins, and robotics, so as someone with an ecommerce background the stream was unexpectedly interesting.

For example, here is a sample product web page. You can rotate between the views of the product, change the product colors, the size, etc.

The product image is streamed from the cloud and dynamically updated as changes are made.

Another example was of a handbag. OpenUSD supports the normal 3D model concepts of meshes (defining the shape of the handbag) and materials (the texture, color, and reflective properties of sections of the mesh). It also has the concept of “Variants” built in. Variants allow the model creator to define variations of the item – such as swapping colors or textures, or even the entire mesh. This aligns well with real-life product variants. Some variants might just be a different color, but other variants might have additional trim. By encoding the variants into the core 3D model, developers building experiences like the above only have to say “give me the black variant” – they don’t have to know if the model structure changed. Good isolation of concerns.

Here is an example of a React app with buttons on the right for the color. Clicking on the button updates the live rendered stream.

NVIDIA ran a Deep Learning Institute (DLI) session at GTC, which should be available online in the near future. But there is also online documentation of how to build a product configurator. It has some details about the architecture of how it is put together. Details are still emerging (or I am just not following the right sources!), but Omniverse Cloud (which includes Omniverse GDN, their graphics delivery network) handles the streaming, messaging, and running of USD Composer in the cloud for you.

During the live stream they showed some of the steps involved. For example, Omniverse has a node based scripting language (OmniGraph), which includes an “On MessageBus Event” node. The following graph shows how the event (streamed from the web app) can change the product variant selection. The React app does not have to know anything about 3D modeling; it just picks the variant. (There are hundreds of node types you can use to build up graphs visually instead of with code.)

Some React code snippets – first some components with the list of color names (that have to match what the variant supports).
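As a rough sketch of what such a list might look like, here is a typed set of color names with a small guard function. The color names and the `isColorVariant` helper are hypothetical, not taken from NVIDIA's sample; the only point is that the strings must match the variant names authored in the USD model.

```typescript
// Hypothetical color names: in a real app these must match the
// variant names defined in the USD model exactly.
const COLOR_VARIANTS = ["Black", "Tan", "Red"] as const;

// Union type derived from the list: "Black" | "Tan" | "Red".
type ColorVariant = (typeof COLOR_VARIANTS)[number];

// Runtime check, useful when the name comes from user input or a URL.
function isColorVariant(name: string): name is ColorVariant {
  return (COLOR_VARIANTS as readonly string[]).indexOf(name) !== -1;
}
```

A React component could then render one button per entry in `COLOR_VARIANTS`, so adding a color to the page is a one-line change.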

Then the code to build up a message to send to the streaming service over a web socket. Pretty straightforward.
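A minimal sketch of building such a message might look like the following. The message shape (`event_type`, `payload`, `variantSet`, `variantName`) and the `"setVariant"` event name are assumptions made for illustration; the real field names are whatever the OmniGraph event node in your scene is configured to listen for.

```typescript
// Hypothetical message shape; the actual schema is defined by the
// receiving graph, not by this sketch.
interface VariantMessage {
  event_type: string;
  payload: {
    variantSet: string;  // e.g. the name of the variant set ("color")
    variantName: string; // e.g. the selected variant ("Black")
  };
}

// Build the JSON text that would be sent to the streaming service.
function buildVariantMessage(variantSet: string, variantName: string): string {
  const message: VariantMessage = {
    event_type: "setVariant", // assumed event name
    payload: { variantSet, variantName },
  };
  return JSON.stringify(message);
}
```

Note that nothing here mentions meshes or materials: the web side only names the variant it wants.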

More detail can be found in their product configurator sample documentation, including a download ZIP of the full React application if you want to poke around. For example, here is the code to send the message.
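A minimal sketch of the sending step, assuming a plain WebSocket-style connection, could look like this. The `SocketLike` interface, the `sendVariantSelection` function, and the message fields are all hypothetical and are not NVIDIA's streaming client API; the sample application wraps this in its own library.

```typescript
// Minimal subset of the browser WebSocket interface that this sketch needs.
// A real WebSocket object satisfies it structurally.
interface SocketLike {
  readonly readyState: number;
  send(data: string): void;
}

// Same value as WebSocket.OPEN in the browser API.
const SOCKET_OPEN = 1;

// Send the selected variant name; returns false if the socket is not open.
function sendVariantSelection(socket: SocketLike, variantName: string): boolean {
  if (socket.readyState !== SOCKET_OPEN) {
    return false; // not connected (yet), nothing is sent
  }
  // Assumed message shape, for illustration only.
  socket.send(JSON.stringify({ event_type: "setVariant", payload: { variantName } }));
  return true;
}
```

A button's click handler would call something like `sendVariantSelection(socket, "Black")`, and the updated render arrives through the video stream rather than as a response to this call.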

But 3D models are expensive to create, right? Yes. Yes they are. But I think there are two emerging answers to this challenge.

- There are apps that can scan real world 3D objects and convert them into models. I don’t think they are product quality yet, but they are improving and may get there in the coming years.

- Manufacturers may be able to provide the models, especially those using CAD software as part of their process.

Here is an example of a car configurator. Where did the model come from? The CAD software the manufacturer used to design the car! Many packages now support OpenUSD as an export format, meaning the level of detail in the car is amazing. Every knob and button on the dash of the car is from the original CAD model. This means all the lighting gets all the shadows and reflections correct. You can even zoom in and see the detail on the tires.

And of course, you can change the wheels, change the paint color, change the trim, change the environment … all with high quality rendering. The above image is rendered, not a photo.

More fun? Omniverse also announced Apple Vision Pro support. By positioning a real world chair appropriately, you can sit “inside” the car, with high quality imaging of what the car is like. “It is very, very strange that you walk around virtual doors,” — Jensen Huang (CEO).

As 3D becomes more commonplace during product design and manufacturing, standards, such as OpenUSD, will make it more feasible/cost effective to have high quality 3D modeling of products.

Wrapping up

Do you really need live streaming of all products? No! For the handbag example if you are just showing 3 static shots with 3 color variants, just take 3 photos and be done with it!

But these are live 3D models. That means you can start building new experiences. Start with a character, start dressing them up with multiple products available for sale, let the shopper add accessories, then change the environment from walking down the street to at a dance club — all with high quality rendering with colors and textures of the product, even if you zoom in. It is this more interactive and immersive experience that these technologies will make possible.

3D is not new – it has been in video games for years. It is the recent improvements in quality, with physically based materials and lighting, that I find exciting. I still remember conversations with merchant marketing teams who were not willing to lower the resolution of product images as a tradeoff for a faster site. These days you can have very high quality models and renders in 3D. Big files for sure, but with cloud based rendering you can keep the models in the cloud. You just download the render.

So I am personally very interested to see how this goes.