VRoid characters in Unity include “Blendshapes” (called “Shape Keys” in Blender) that morph the eyes, eyebrows, and mouth on the face to create different expressions. These work by warping the mesh of the character (moving the contours of the face). Other expressions such as blushing change the texture of the mesh (what is displayed on the mesh), although both can be done at the same time (widen eyes and blush together).

This post focuses on blendshapes, not textures.

Blendshape Clips and Blendshapes

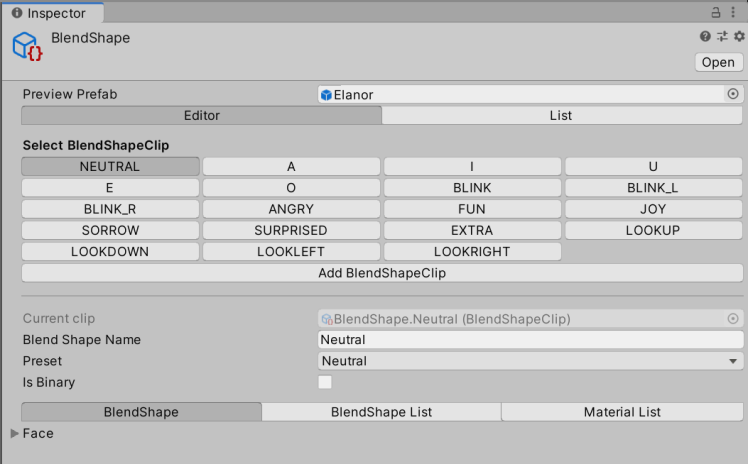

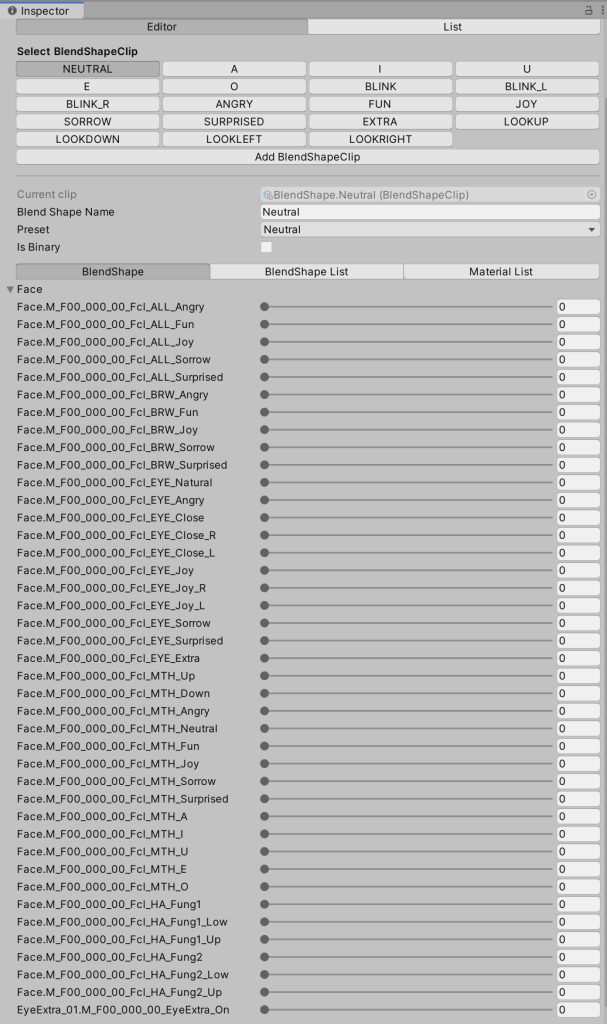

There are several levels to blendshapes you can tap into in Unity. At the top level there are blendshape clips for basic mouth positions (A, E, I, O, U) as well as expressions for angry, fun, joy, sorrow, surprised, and “extra” (an anime style “> <” eye expression). (There are also blendshape clips defined for looking up/down/left/right, but I don’t think they do anything.) I believe these blendshape clips are what is used by applications such as VRChat that allow the user to use a preset emotion with their VRM character.

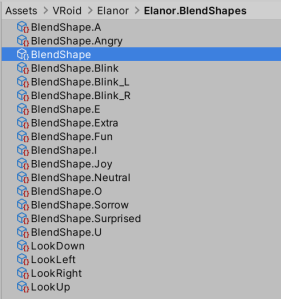

The easiest way to explore the blendshapes for a VRoid character is to look in its asset directory. The following example is for a VRoid character called “Elanor”.

If you open the “Blendshape” asset in the character’s “<character>.Blendshape” directory you will bring up the following definition which includes references to each of the blendshape clips in the same directory.

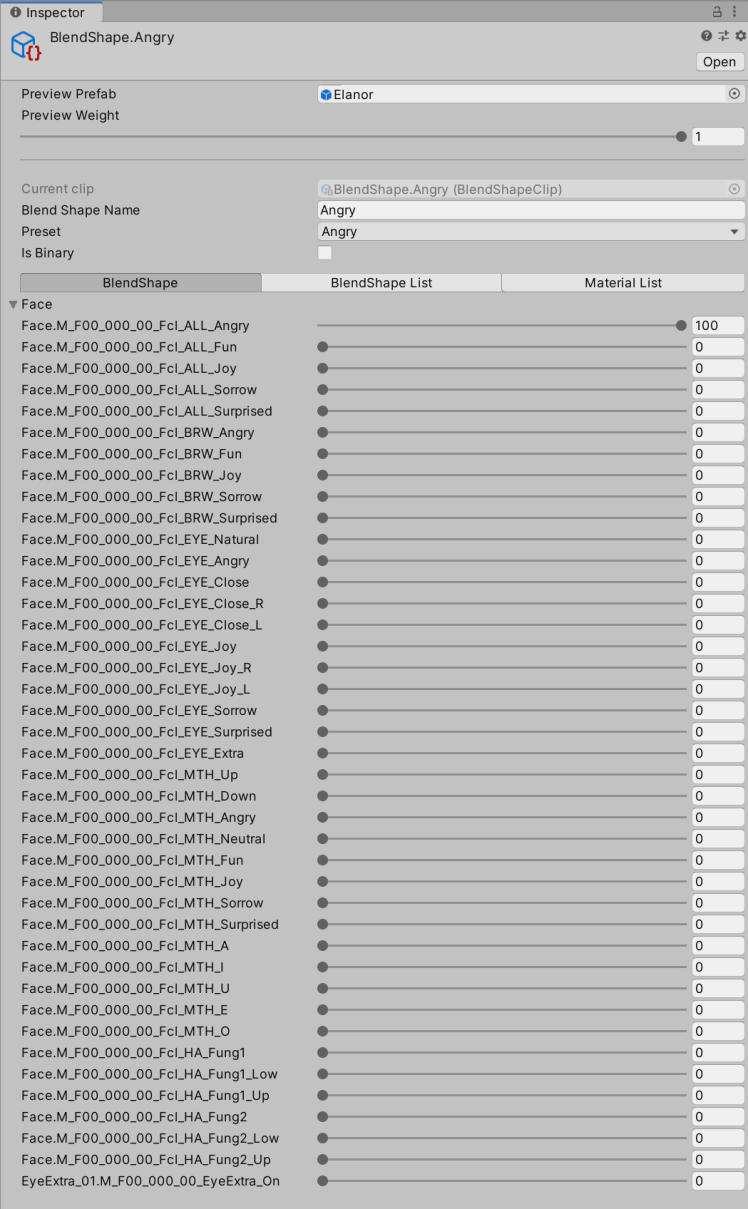

Each of the blendshape clips in the “Select BlendShapeClip” section is linked to a file in the blendshape asset directory. Double clicking on the other files opens up the blendshape clip definition. Here is the definition of “angry”.

You may notice that most (but not all) of the top level blendshape clips have a corresponding blendshape. “Angry” for example sets “Face.M_F00_000_00_Fcl_ALL_Angry” to 100; it does not adjust any of the other blendshapes.

The VRoid character has a pattern to the blendshape names. The “ALL” blendshapes (“Face.M_F00_000_00_Fcl_ALL_…” to be precise) modify the whole face at once (eyes, eyebrows, and mouth), the “BRW” blendshapes control the eyebrows only, “EYE” controls the eyes, “MTH” controls the mouth, “HA” controls the teeth (fangs), and the last value “EyeExtra” is a special case for the anime “> <” eye expression.
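To make the naming pattern concrete, here is a minimal Python sketch that splits a blendshape name into the part of the face it controls and the expression. It is plain string handling for illustration, not a Unity or UniVRM API; the function name `describe_blendshape` and the `GROUPS` table are my own, and the handling of names without a group prefix is an assumption.

```python
# Illustrative only: plain string handling, not a Unity or UniVRM API.
GROUPS = {
    "ALL": "whole face",
    "BRW": "eyebrows",
    "EYE": "eyes",
    "MTH": "mouth",
    "HA": "teeth (fangs)",
}

def describe_blendshape(name):
    """Split a VRoid-style blendshape name into (face area, expression)."""
    # Everything after "_Fcl_" is "<GROUP>_<Expression>", e.g. "ALL_Angry".
    _, _, suffix = name.partition("_Fcl_")
    if not suffix:
        return ("unknown", name)
    group, _, expression = suffix.partition("_")
    if group in GROUPS:
        return (GROUPS[group], expression)
    # Assumption: anything without a known group prefix is treated as a special case.
    return ("special case", suffix)

print(describe_blendshape("Face.M_F00_000_00_Fcl_ALL_Angry"))
```

A lookup like this is handy if you want to list a character's blendshapes grouped by eyebrows, eyes, and mouth before deciding which ones to mix.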

If you don’t like the “angry” definition as is in the “BlendShape.Angry” blendshape clip, you can set the “ALL_Angry” setting to zero, then set the eyes, eyebrows, and mouth individually. For example, if you don’t like the mouth for angry, you might set the “ALL_Angry” value to zero, then set the individual “BRW_Angry” (eyebrow) to 100, “EYE_Angry” (eye) to 100, and “MTH_Angry” (mouth) to 50. Unity merges the various blendshapes together to form the final expression. This is possible because the blendshapes record movements relative to the default pose, allowing the deltas from different blendshapes to be added together.
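The additive idea can be sketched in a few lines of Python. This is a toy model, not what Unity runs internally: the two-vertex "mesh" and the delta values are made up, and `blend` is a hypothetical helper. It only shows why weighted deltas from separate blendshapes can simply be summed.

```python
# A toy model of additive blendshapes; the vertex data is made up for illustration.
def blend(base, deltas, weights):
    """Return base vertices plus each blendshape's delta scaled by weight / 100."""
    result = [list(v) for v in base]
    for name, weight in weights.items():
        scale = weight / 100.0
        for i, delta in enumerate(deltas[name]):
            for axis in range(3):
                result[i][axis] += scale * delta[axis]
    return result

# Default pose: vertex 0 stands in for an eyebrow, vertex 1 for a mouth corner.
base = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]

# Each blendshape stores an offset per vertex, relative to the default pose.
deltas = {
    "BRW_Angry": [(0.0, -0.2, 0.0), (0.0, 0.0, 0.0)],
    "MTH_Angry": [(0.0, 0.0, 0.0), (0.0, -0.4, 0.0)],
}

# Eyebrows at full strength, mouth at half strength.
posed = blend(base, deltas, {"BRW_Angry": 100, "MTH_Angry": 50})
print(posed)
```

Because each blendshape only contributes an offset, the order in which they are applied does not matter, and a weight of zero contributes nothing.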

You may have noticed however that there are “blink” blendshape clips for both eyes (Blendshape.Blink), left eye (Blendshape.Blink_L), and right eye (Blendshape.Blink_R) but no “blink” blendshape (Face.M_F00_000_00_Fcl_ALL_Blink does not exist). This is because the “close” blendshape is used instead. Many of the blendshape clips in the character’s asset directory have the same name as blendshapes, but there is no requirement in Unity to do so.

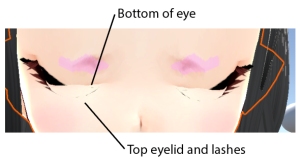

Related to the blendshape clip for blinking, I sometimes find that a strength of 100 closes the eyes too far, resulting in the eyelashes poking through the skin beneath the eyes.

The solution here is to reduce the EYE_Close value for the blink blendshape clip to 80 rather than 100. The exact value depends on the eye shape settings you used in VRoid Studio. It is pretty hard to create a blendshape that will work perfectly for all settings a user selects inside VRoid Studio.
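One way to think about a blendshape clip is as a named set of blendshape weights. The Python sketch below models that with a dictionary. It is a hypothetical data model (real clips are Unity assets edited in the inspector), and `CLIPS` and `with_weight` are names I made up for illustration.

```python
# Hypothetical data model; real blendshape clips are Unity assets, not dictionaries.
CLIPS = {
    "Angry": {"ALL_Angry": 100},
    "Blink": {"EYE_Close": 100},  # clip name and blendshape name need not match
}

def with_weight(clips, clip_name, blendshape, weight):
    """Return a copy of the clips with one blendshape weight changed in one clip."""
    updated = {name: dict(bindings) for name, bindings in clips.items()}
    updated[clip_name][blendshape] = weight
    return updated

# Stop the blink at 80 so the eyelashes do not poke through the skin.
softer = with_weight(CLIPS, "Blink", "EYE_Close", 80)
print(softer["Blink"])
```

The right value still has to be found by eye for each character, since it depends on the eye shape chosen in VRoid Studio.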

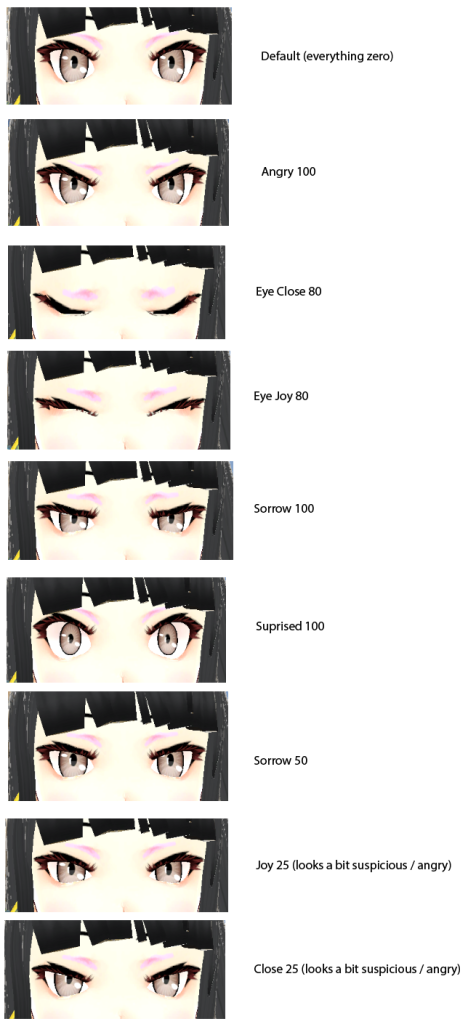

Eye Expression Appearance

So what do the different eye expressions look like? The following are examples of eyes. I used 80 rather than 100 for a few of the blendshapes due to the eyelash problem mentioned above.

There are some expressions that I would like to create that are not covered by the built-in blendshapes. For example, I would like a wide-eyed expression like Surprised but where the iris does not get smaller. While you can control the different strengths of blendshapes and mix them together, you cannot use only part of a blendshape. Because the Surprised blendshape for eyes both widens the eyes and shrinks the iris size, you cannot use the Surprised blendshape without it shrinking the iris. That is, I would like the “surprise” feeling, without the “fear” indicated by reduced iris sizes.

Another expression I would like is a sleepy/tired/bored expression where the outer edges of the eyes are lowered relative to the inner edges. This would be particularly useful as it could also be blended with other eye expressions, creating more variation in the expressions that can be created.

This is an area I am still researching. I believe it should be possible to define new blendshapes for a character using a tool such as Blender, but I have not yet worked out the magic incantation to make it work. (Please leave a comment below if you know of a good article describing how to achieve this!)

Eyebrows

Separate blendshapes are defined for moving the eyebrows (the “BRW” blendshapes).

Examples of eyebrow movements are as follows:

While the eyebrow positions for Fun, Joy, and Surprised look similar, “Joy” is actually a little lower than the other two (which are very close to each other).

Note also that the Sorrow eyebrows may touch the normal eyes at 100 strength, but if the eyes also have the Sorrow blendshape applied, the eyes will also have moved down to create more space.

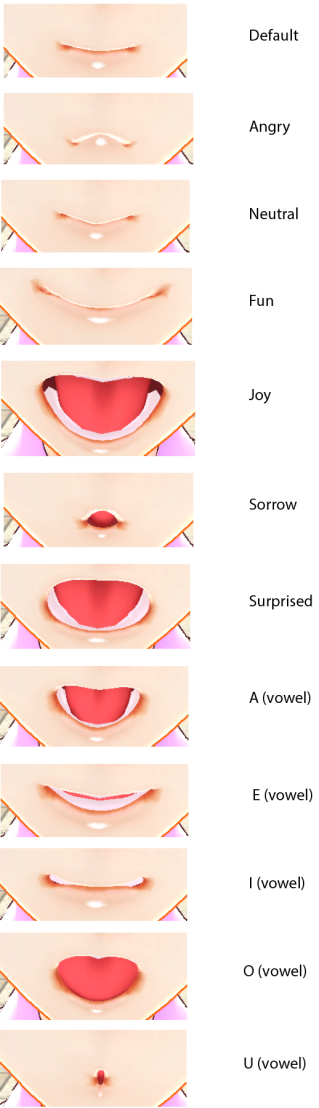

Mouth Blendshapes

Mouths are another example of where I personally would like additional blendshapes. The MTH_Up and MTH_Down blendshapes move the entire mouth up or down without distorting the shape. (I am not sure when you would use this as it makes the character look different.) The other blendshapes for the mouth are as follows.

Having more shapes creates more opportunities to mix and match. For example, “MTH_Angry” lowers the corners of the mouth (but also makes it narrower). It can be combined with other mouth positions to create more serious versions of other expressions.

Recording Expression Animations

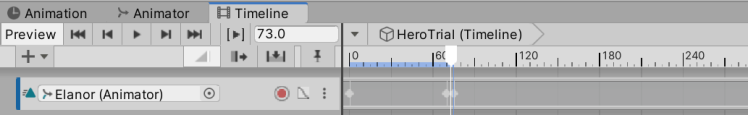

So how do you record blendshapes for use in expressions in the timeline? First, open up the timeline window. Then click the red dot next to the animator for your character (if the character is not already in the timeline, drag it from the scene to the timeline panel and select “Animation Sequence”). Set the position marker to the point where you want the expression to change.

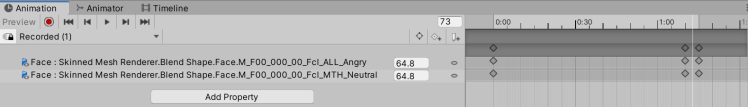

Then go to the scene hierarchy, open up the “Face” of the character, and start modifying blendshape values. With the record flag on, changing the blendshape properties will add new keyframes to the timeline. Double click on the track to expand it in the animation window to look at the properties being recorded more carefully.

In this example I changed Angry to 100, but decided I did not like the expression so also set Neutral for Mouth to 100 at the same time point. This made the mouth not bend downwards quite as far. I then went back in the timeline and set the property values to zero. If you look at the screenshot above, the play head is around half way between the two keyframes. The values shown are 64.8 as this is the blended value between the previous keyframe of 0 and the next keyframe of 100. The distance between the keyframes controls how fast the expression on the face will change.
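As a rough check on that 64.8, here is a small Python sketch of easing between two keyframes. It assumes the curve is flat at both keyframes (a cubic ease in and out); that is my assumption about the curve shape, not something I have confirmed about Unity's tangent settings, and `ease_between_keys` is a hypothetical helper. Under that assumption, a play head 60% of the way from a key of 0 to a key of 100 reads 64.8, whereas a straight linear blend would read 60.

```python
def ease_between_keys(t0, v0, t1, v1, t):
    """Cubic ease between two keys, assuming flat (zero) tangents at both keys."""
    u = (t - t0) / (t1 - t0)       # 0 at the first key, 1 at the second
    u = min(max(u, 0.0), 1.0)      # clamp so times outside the keys hold the key value
    s = u * u * (3.0 - 2.0 * u)    # 3u^2 - 2u^3
    return v0 + (v1 - v0) * s

# Keys at time 0 (value 0) and time 1 (value 100); play head 60% of the way along.
print(round(ease_between_keys(0.0, 0.0, 1.0, 100.0, 0.6), 1))  # 64.8
```

Moving the two keys closer together in time does not change these values, only how quickly the face passes through them.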

I should also note that when you start playing the scene in Unity, the BlendShapeProxy script on the character root has additional properties defined (the blendshape clips). These properties I assume are dynamically determined at run time. This however makes them hard to use when creating a timeline as you typically are not in “play” mode when editing animation clips. Changes in “play” mode are typically lost when you exit play mode, so I personally lean away from using that script for creating animations.

Conclusions

This post went through facial expressions using blendshapes and blendshape clips. For animation in a Unity timeline, I ignore the blendshape clips. They are more relevant if you want to load the VRM character into other packages such as VRChat. Instead, I record the Face blendshape property values to cause different expressions to be created.

I still want to look into how to add additional blendshapes to a character (not possible in Unity itself I believe), so I can have a richer set of facial expressions.

I also need to do more research on the best way to lip sync the mouth with dialogue being spoken. The three strategies I know of are doing it manually (very painful if much speech is going on), using a camera to watch your mouth positions and mimic them, and speech analysis, where the sound is analyzed to work out the likely phoneme being pronounced. I need to research which approach works best in Unity. I also need to determine if they provide any additional capabilities related to controlling facial expressions. My initial investigations indicate there are solutions in the Unity Asset Store, but they require additional blendshapes to be added to VRoid characters to be able to control their facial expressions fully.

would you be able to write a tutorial for this for blender? I just need to use it in blender…

I would look around the web – I am not very good in blender and might give bad advice. There was so much to learn, which is partly why I gave up on it… also because the tools optimized meshes at times, causing other things to fail. So in the end I gave up on it. What are you trying to achieve? I use HANA_Tool for example with success to get more expressions.

I want to simply change the expressions in blender. I don’t need to use unity for anything 🙂

Love this explanation, would you be aware of any method that can be used to lipsync these expressions with audio files? I get confused as to which blendshapes are compatible with which lipsync utility, so would appreciate if you could help me bring my NPCs to life 😀

I don’t, sorry. I have not used any audio processing for lip sync with Unity. I have only played with iFacialMocap and similar which watch with a camera.
